Christine Preizler

Chief Commercial Officer, Sigma Squared

Another week, another lawsuit questioning the use of AI in candidate screening and hiring decisions. If you’re a large organization, you’re either already using AI in hiring or planning on it. How confident are you that your approach advances core company goals while limiting your exposure?

Last summer, a judge allowed a class action to move forward alleging that HR software provider Workday’s applicant tracking software was systemically disadvantaging applicants over forty. Last week’s lawsuit is a little different. Its plaintiffs do not allege discrimination against a particular protected class. Rather, they draw parallels between the scoring algorithms used by another applicant tracking system, Eightfold, and a much more familiar scoring system: credit scores.

“The problem of employers relying on secretive and unreliable third-party reports (or ‘dossiers’) when making employment decisions was a core concern Congress sought to address in passing the Fair Credit Reporting Act (‘FCRA’).”

The Eightfold filing lays out the ways in which the firm’s scoring system (and others like it) is material to candidates’ livelihoods, is completely opaque, and offers no path to remedy inaccuracies. The plaintiffs rely on Eightfold’s own published documentation to describe how a proprietary large language model (“LLM”) draws on more than 1.5 billion data points across public and private data sources to generate recommendations that are at once highly consequential for candidates and impossible for them to inspect. They argue that using this scoring methodology without appropriately notifying candidates, explaining how scores are produced, and providing a path to dispute or correct a score violates the FCRA.

Not All Hiring AI is Created Equal

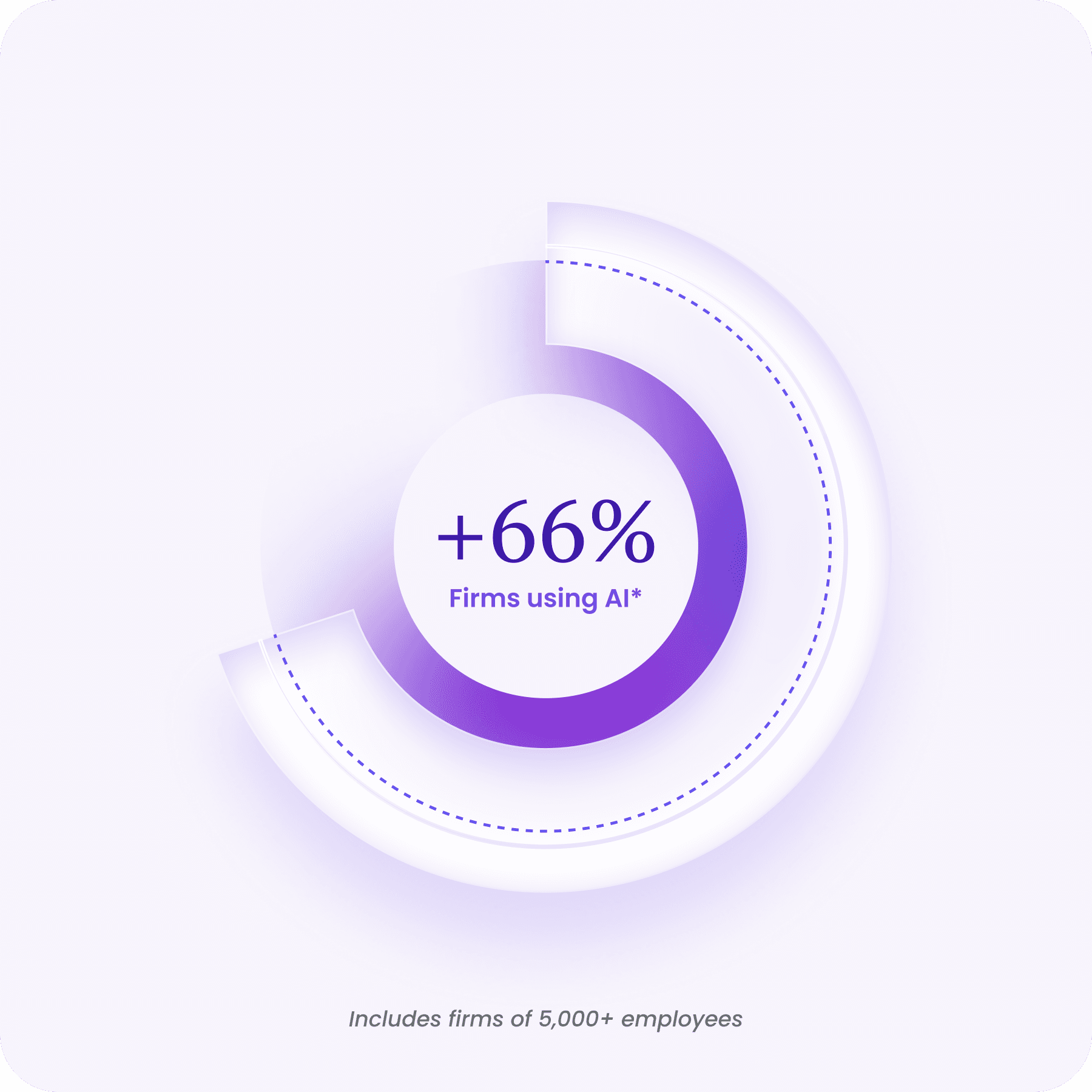

AI in hiring is not new. More than two-thirds of firms with at least 5,000 employees already use it to filter, score, rank, and hire candidates.

Most of these systems rely on two tools: keyword matching and pattern matching. Neither is effective at improving business outcomes via talent. Keyword matching is easy to game and assumes your job description is actually predictive of performance. Pattern matching risks codifying legacy bias and unnecessarily narrowing your talent pool. Put generative AI on top of either, and you have a recipe for risk.

Outcome-oriented models are a better approach. They work backwards from real success criteria, like performance reviews, revenue per head, or retention. The best ones work to isolate the candidate features that are independently predictive of those outcomes, holding everything else constant. This gets you out of the habit of centering intermediary process KPIs like time to hire or recruiter productivity, which are easy stats to show off at a quarterly readout but incentivize exactly the wrong kind of behavior: metric attainment over merit.

Moving Backwards Isn’t an Option

Hiring faster is not always better. Hiring strong candidates as quickly as is feasible is. Productivity measured only by how rapidly a candidate arrived, rather than how well they end up performing or how long they stay, is a poor yardstick. Fast at the expense of fair is not a good outcome. Focusing on interview-to-hire conversions, performance, and revenue per role creates better incentives.

As an aside: let’s not pretend that candidates are not also using AI in the hiring process. They are using ChatGPT to generate resumes and cover letters. They are scraping job posts and applying to hundreds at a time, and gaming keyword match systems at scale with their own automation. Everybody is ghosting everybody. We can argue over who started the application arms race, but it’s here to stay.

Getting rid of software-based filtering is not the answer. Filtering has always existed, human or otherwise. It has become more important to get it right, because hiring managers are fielding more and more applicants per job. If we ask them to review each application manually, we know what will happen. Were we hiring more meritocratically thirty years ago?

AI Can Mitigate Bias in Hiring — When Done Right

Though we increasingly partner to deliver business outcomes, diagnosing and mitigating bias is a core competency for us here at Sigma Squared. We got our start helping the NFL understand how different career paths related to head coaching success, and how those different paths helped to shape racial disparities in the head coaching population.

To do that work, we mapped out the career paths of all ~530 people who have held head coaching roles. Our founders took methods common to the economics and statistics departments they’d come from to isolate specific experiences and quantify how strongly each factor, holding all the others constant, was related to success in the role.
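To make "holding all the others constant" concrete, here is a minimal Python sketch using ordinary least squares, one standard method from statistics. It is an illustration only, not Sigma Squared's actual model: the data is made up, and the names `fit_ols`, `years_coordinating`, and `played_pro` are hypothetical. In the toy data, success depends only on `years_coordinating`, but `played_pro` happens to be more common among people with longer tenures.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_ols(feature_rows, outcomes):
    """Return [intercept, coef_1, ...] that minimize squared error."""
    rows = [[1.0] + list(f) for f in feature_rows]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcomes)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Made-up data (assumption): success is driven only by years_coordinating.
years_coordinating = [1, 2, 3, 4, 5, 6]
played_pro = [0, 0, 1, 0, 1, 1]
success = [1 + 2 * y for y in years_coordinating]

# Looking at played_pro on its own picks up the effect of tenure.
naive = fit_ols([[p] for p in played_pro], success)

# Including both features estimates each one with the other held constant.
adjusted = fit_ols(list(zip(years_coordinating, played_pro)), success)

print("played_pro alone:", round(naive[1], 2))
print("played_pro, holding years constant:", round(abs(adjusted[2]), 2))
print("years_coordinating, holding played_pro constant:", round(adjusted[1], 2))
```

On this toy data, `played_pro` looks strongly related to success when examined alone (a slope of about 4.67), but its coefficient falls to effectively zero once tenure is in the model. Real career data is noisier and needs far more care, but the logic of separating one factor from the others is the same.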

That approach, isolating candidate features and identifying bias risk, is the foundation of the software we’ve built to help our customers facilitate effective, meritocratic hiring processes. We want to help our clients first understand what is uniquely predictive of success for a specific role, at their firm, and then codify those learnings into scalable software workflows. Not based on a massive database of random people’s information. Not based on a generative AI model coming to its own, erratic idea of what ‘success’ is. We want our clients to understand what elements of a candidate profile are and are not related to actual performance, in their actual environment, so that they can hire and retain the best. It is the precision of this approach that has helped our clients reduce mis-hires by 43%, and increase in-role performance (by their own measures!) by 10% while also broadening their candidate pools.

Understanding performance relationships alone doesn’t eradicate algorithmic bias. Perhaps there is an applicant feature that does have predictive value, but it’s a direct proxy for a protected class. In 2018, it was widely reported that Amazon had scrapped a hiring algorithm that kept penalizing women. This can happen if you have historically hired mostly men for a role (or mostly anyone). The model you train reinforces what you’ve historically done. You can re-weight the feature, but the better approach is to understand at the outset which things have a causal relationship with success, controlling for race, gender, and age.

What’s trickier is when an applicant feature has performance merit but also an unexplained relationship to a protected class. This is where a robust risk mitigation process is vital. At Sigma Squared, it’s why we run every single applicant feature through bias tests to identify potential conflicts. All potential conflicts are human-reviewed so that they can be appropriately addressed. Deterministic, explainable models are foundational to this risk-mitigation process: if you do not know why, or cannot replicate how, a specific candidate or candidate feature was positively rated, you cannot remedy it.
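As a rough sketch of what a per-feature bias check might look like, the Python below compares how often each applicant feature appears across groups and flags lopsided features for human review. Everything here is an assumption for illustration, not a description of Sigma Squared's actual tests: the function names, the sample data, and the 0.8 cutoff, which is borrowed from the "four-fifths" rule of thumb commonly applied to selection rates.

```python
from collections import defaultdict

# Assumption: cutoff borrowed from the four-fifths rule of thumb.
REVIEW_THRESHOLD = 0.8


def feature_rates_by_group(applicants, feature, group_key):
    """Share of applicants in each group who have the given yes/no feature."""
    totals = defaultdict(int)
    with_feature = defaultdict(int)
    for applicant in applicants:
        group = applicant[group_key]
        totals[group] += 1
        if applicant[feature]:
            with_feature[group] += 1
    return {group: with_feature[group] / totals[group] for group in totals}


def flag_for_review(applicants, features, group_key):
    """Return {feature: ratio} where lowest group rate / highest is under the cutoff."""
    flagged = {}
    for feature in features:
        rates = feature_rates_by_group(applicants, feature, group_key)
        highest = max(rates.values())
        if highest == 0:
            continue  # nobody has this feature, so there is nothing to compare
        ratio = min(rates.values()) / highest
        if ratio < REVIEW_THRESHOLD:
            flagged[feature] = round(ratio, 2)
    return flagged


def make_applicant(group, has_certification, played_varsity_sport):
    """Build one hypothetical applicant record."""
    return {
        "group": group,
        "has_certification": has_certification,
        "played_varsity_sport": played_varsity_sport,
    }


# Hypothetical applicants: two groups of four people each.
applicants = [
    make_applicant("A", True, True),
    make_applicant("A", True, True),
    make_applicant("A", True, True),
    make_applicant("A", False, False),
    make_applicant("B", True, True),
    make_applicant("B", True, False),
    make_applicant("B", True, False),
    make_applicant("B", False, False),
]

flagged = flag_for_review(
    applicants, ["has_certification", "played_varsity_sport"], "group"
)
print(flagged)  # {'played_varsity_sport': 0.33}
```

Because the check is deterministic, the same inputs always produce the same flags, and a reviewer can trace each flag back to the group rates behind it. A flag is only a prompt for human review, not a verdict that the feature is biased.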

Where to Start

Full transparency: I have a dog in this fight! It has taken us time to deliberately build out the approach we take at Sigma Squared. We won’t tell clients with certainty at the outset of a deployment what the secret ingredient to their hiring success is, because we know we need to test it first.

AI anxiety is not going away. The answer is not to put your business at a disadvantage by swearing it off entirely. If you’re considering incorporating AI into your hiring process or worried about the exposure your existing process already creates, here’s where to start:

Define the goal.

The best talent workflows work backwards from outcomes that matter to your business. Are you trying to attract new kinds of talent? Improve revenue per head? Improve retention? Get alignment before you bring AI to the party. Then, start by identifying what actually relates to your target outcome.

Understand how and where your algorithms create bias risk.

State and local laws are evolving, but they have a lot in common: they are increasingly asking employers to disclose how AI is being used and audit algorithms for bias risk. You will need a clear accounting of where your teams are using these algorithms and how those algorithms use applicant data in order to comply.

Explain, explain, explain.

Generative AI creates a lot more risk than old-school machine learning here, because its outputs are not explainable or auditable. If you are making filtering, scoring, ranking, or hiring decisions using AI, you should be using deterministic models that can clearly explain decisions and relate those decisions to real performance outcomes.

Consistency is key.

Clear rubrics are the foundation of fair processes. Algorithms can help you build and implement those rubrics, but how you communicate the role of and enforce the use of these tools with your people will determine if you sink or swim.

Finally, if you’ll be at Transform HR in March, be sure to catch our panel with longtime customer Tinuiti. Sigma Squared Co-Founder Tanaya Devi and I will chat with Tinuiti’s leaders about how they’ve used explainable algorithms to improve performance outcomes while also advancing the kind of meritocratic organization that is core to Tinuiti’s culture.